X is simply the space the managerial class can't control

I want to preface this piece with a concession that RNZ’s reporting has improved a lot lately. Their articles are fuller and usually present a variety of perspectives. Their online written reporting is on the whole very good, but unfortunately their radio content is not, and hiring John Campbell will make things worse. Private media companies like Stuff and NZME republish RNZ articles so regularly that I wonder why the Herald in particular doesn’t fire all of its journalists and just syndicate RNZ content.

The articles I discuss here are not good examples of the quality of RNZ’s reporting. They are indulgences in reflecting the meta-narratives of the leftist managerial class.

RNZ wants you to believe its Grok explainer is about protecting women and children from AI abuse. This is patent nonsense, but before sympathisers start squealing, let me show you just how disingenuous it is. I will show you this is about power, narrative control, and punishing the one platform that no longer obeys the political and institutional consensus.

We have entered a time of immense uncertainty thanks to rapid adoption of AI by just about everyone. AI will transform the way we do business, how we use our brains, and bring great advancements in technology and knowledge. It will also mess up our job markets, cause inevitable chaos, and be used by sickos to do weird and awful things.

AI is already being used to create non-consensual sexualised deepfakes. This is a real concern and I am pleased to see Laura McClure’s member’s bill addressing this has been pulled from the Biscuit Tin and will be debated in Parliament.

However, in RNZ’s article, the framing is not about stopping that harm, it is about constructing a moral justification for going after X.

This is not an “X problem.” It is a generative-AI problem. The ability to manipulate images into nude or sexualised forms exists across the entire AI ecosystem. ChatGPT-powered tools, Google’s Gemini, Claude, Midjourney, Stable Diffusion and Photoshop-style editors all operate on the same underlying capabilities. The difference is not whether the technology exists, but how well it is policed and what it is used for. RNZ’s article pretends Grok invented this abuse and is alone in having sickos use the tools to be inappropriate. Readers of the article would have no clue how widespread and accessible this tech is. Instead they will likely have concluded that Grok, X, and Elon Musk are unique deviants that should be banned.

It all began with a burst of Grok users generating AI “bikini” and “undressing” images, a crude but hardly novel misuse of generative technology. From that spark, a full-scale political and media panic was manufactured, complete with calls for bans, government investigations, and ominous talk about whether X should even be allowed to operate. A few thousand tasteless prompts suddenly justified rewriting the rules of the internet, when the episode should have been treated as what it actually was: a moderation and safety failure to be fixed, the same way OpenAI, Google, and every other AI company handles misuse.

It is important to note that there is no evidence that Grok produced full nude images. The inappropriate fakes were limited to bikini shots. But it was instantly inflated into a morality play about Elon Musk, free speech, and the supposed degeneracy of an entire platform. A narrow technical problem was turned into a cultural crusade, because it was never really about bikinis or even deepfakes. It was all about seizing a politically convenient excuse to attack the one major social network that the powers that be cannot control.

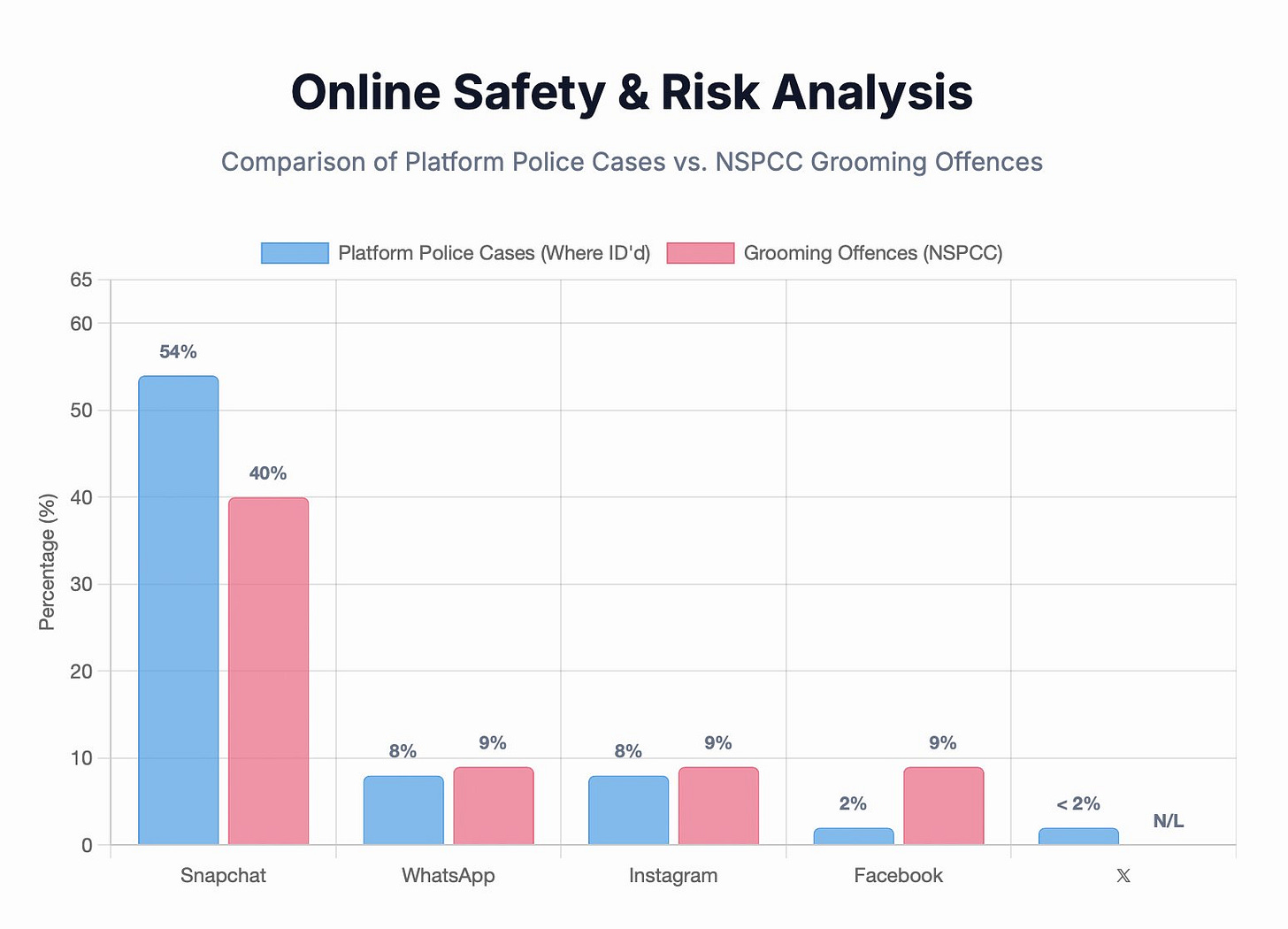

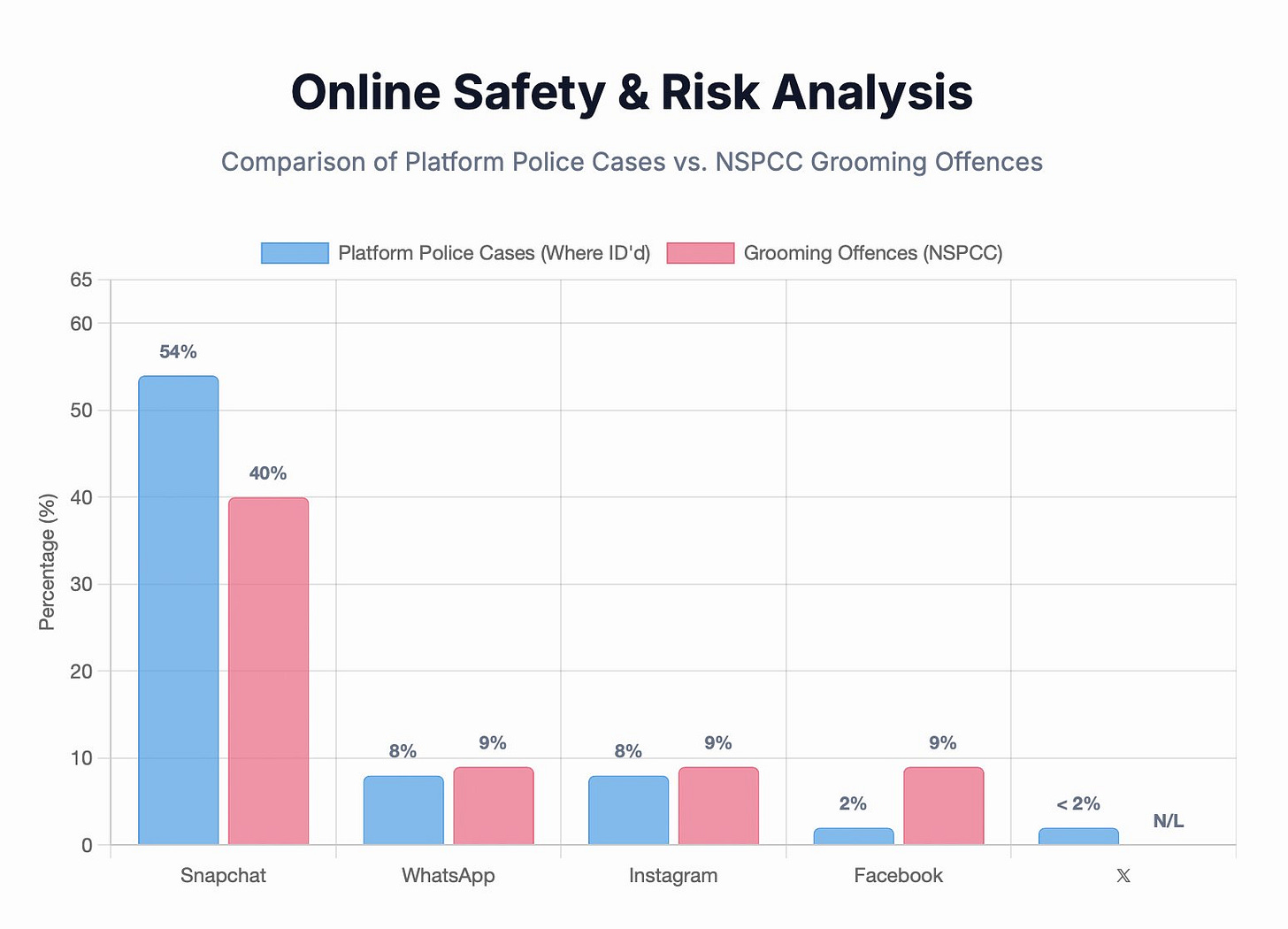

The really infuriating thing is that if this story were truly about protecting children, X would not be the target. Grooming and sextortion overwhelmingly occur on platforms built around private messaging and youth audiences such as Snapchat, Instagram, WhatsApp and TikTok. These platforms repeatedly appear in law-enforcement and child-protection data as the primary sites of abuse. X, by contrast, is largely public-facing, adult-skewed, and hostile to covert grooming networks. That does not make it perfect, but it makes it a strange scapegoat if the goal is actually to reduce child exploitation.

UK Prime Minister Keir Starmer was the primary culprit in kicking off this whole attack on X with his claims that women and children are “not safe” on X. But the UK’s own police data shows that in 2024, England and Wales recorded 51,672 online child sexual exploitation and abuse offences, and 54% were linked to Snapchat alone, with WhatsApp and Instagram each accounting for around 8%. By contrast, X barely registers in the figures, not even making 1%.

The National Society for the Prevention of Cruelty to Children (NSPCC) reports the same pattern, with around 40% of sexual communication and grooming offences tied to Snapchat, yet there is no comparable political or media crusade against the platform that dominates children’s daily digital lives. Given X operates some of the strictest child-safety enforcement in the industry, reporting illegal material directly to authorities, removing thousands of posts and banning hundreds of accounts every week, and constantly tightening its detection systems, this does not look like a serious child-protection matter.

Furthermore, Musk and xAI did not “ignore” the Grok problem. After the backlash to the bikini images, they restricted image-editing functions, tightened prompts, blocked certain outputs and restated clearly that illegal content, especially involving minors, is prohibited.

Elon Musk posted yesterday:

Note: With NSFW enabled, Grok is supposed allow upper body nudity of imaginary adult humans (not real ones) consistent with what can be seen in R-rated movies on Apple TV.

That is the de facto standard in America. This will vary in other regions according to the laws on a country by country basis.

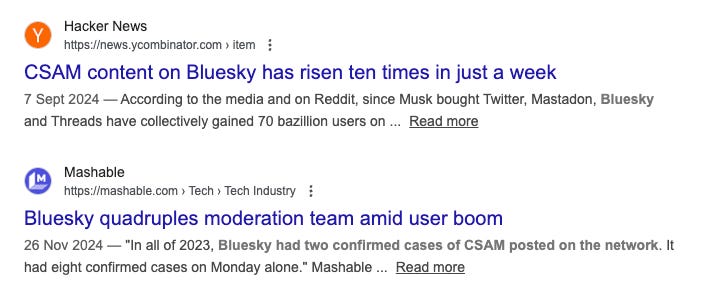

This has been a particularly aggressive attack on Musk and his platforms, but it is not new. Since his purchase of Twitter, the left have done their best to discredit, smear, and bring down the site. It has become a status-proving act to leave the site and a particular flex to force one’s workplace to leave. We have seen the result of this across the usual leftist spheres of media, academia, and public service in New Zealand. Department after department in our government has quietly stopped using X and started using Bluesky. This is despite having substantial existing audiences on the former and no audience on the latter. They are desperate to make Bluesky “a thing”, even though it has an admitted CSAM problem and is like a twenty-first century version of Mao’s Red Army’s round ups.

These decisions are not made for the good of the taxpayers or to benefit New Zealanders. They are solely actions of public servants wanting to virtue signal to their peers and get vengeance on the world’s wealthiest man and friend of the ‘Bad Orange Man’. I have taken to posting on X every missing person I see reported on in the New Zealand media because I know that our media and official police accounts choose not to use the platform most effective at spreading information.

The left despise Elon Musk and his commitment to free speech. But X remains one of the world’s most important real-time news networks. It is where stories break, eyewitnesses post, scandals surface, and official narratives are challenged. And that is why bureaucracies and media organisations utterly loathe it.

Without X we would all be much easier to control. We would have access only to the information they want us to have. This Grok controversy is ultimately a coordinated weaponisation of the ugly aspects of AI that are present on platforms of all kinds. Starmer, Carney, Albo, and leftists in general, want to bring X to heel. “Child safety” is the pretext, but the goal is control.

Now let’s look at RNZ’s article more closely:

Headline: “Grok deepfakes and why NZ is still using Elon Musk’s X”

This is hardly a neutral headline. It introduces the premise that New Zealand’s continued use of X is something that needs explaining, as if it is shameful, odd, suspect, or behind the times. The article is set up from the outset as if the default moral position is to leave X, and the only open question is why some holdouts haven’t complied.

RNZ: “X has been in the line of fire over Grok AI deepfakes”

Immediately, RNZ is signalling to the reader: this is the naughty platform currently being scolded by the ‘Correct People’. It frames criticism as inherently legitimate rather than something to be tested.

RNZ: “has led some to call for a boycott”

“Some” can be ten people on Bluesky having one of their frequent performative pile-ons. RNZ doesn’t name or quantify it, doesn’t identify whether it’s a fringe campaign or coordinated advocacy, and doesn’t test whether boycotts reduce harm or just relocate the conversation to platforms journalists personally prefer.

RNZ: “some left-leaning political parties have mostly abandoned it.”

There’s the flashing neon sign that says this is a political matter, not a safety matter. Given that the headline has already questioned why the country has not stopped using X, this positions the left-wing parties as the good and moral parties who have “done the right thing.” There is no discussion about why they left and whether it is related to inappropriate content or the fact that they were exposed to Community Notes on X.

RNZ: “users could edit an image to create deepfake nude or partially clothed images.”

As I have said, this is possible using virtually any AI tool and there has been no evidence of full nudity being created by Grok. The abuse of AI predates Grok by years. RNZ deliberately doesn’t place X and Grok in the broader ecosystem of generative AI misuse. It treats it as an X-shaped moral failure, which is exactly the rhetorical move required to justify pushes for politicians to “do something about X.”

RNZ: “The company soon said it limited the image function to paid subscribers”

RNZ can’t decide if the story is “X did nothing” or “X reacted but imperfectly.” It also pretends that paid subscribers are able to produce illegal content, because that is what nude content is. As Musk says below, Grok “will refuse to produce anything illegal”.

RNZ: “blocked by Indonesia and Malaysia”

This is accurate in broad terms, but RNZ uses it as a kind of “international condemnation” appeal, as if those governments are our north star for free speech norms. “Some states blocked it” is not automatically “and therefore NZ should consider doing something similar.”

This is just peer pressure with flags. Should we also ban yellow clothing at protests (Malaysia), kissing in public (Indonesia), saying “Allah” if you are not Muslim (Malaysia), sex outside of marriage (Indonesia), or chanting during yoga (Malaysia)?

RNZ: "I have been informed this morning that X is acting to ensure full compliance with UK law," UK Prime Minister Sir Keir Starmer told parliament today

As any gender critical feminist can tell you, “compliance” is not synonymous with “protecting women and children.” RNZ also doesn’t let on that X was never in breach of UK law. Across the world legal systems are grappling with how to regulate AI. I personally am opposed to the idea of people being allowed to create sexualised AI images using the likeness of real people. However, in New Zealand we do not yet have such a law.

RNZ has intentionally framed up the UK context as if it is a narrow fight by a horrified Prime Minister seeking to protect women and children from the indecency of AI bikini images. Really this is tangled up in Starmer’s tanking popularity, the regularity with which his disingenuous tweets are corrected by Community Notes, and the fact that his government has arrested more than 12,000 people for saying things online.

RNZ: “New Zealand has been described as ‘lagging behind’ … needs enforceable standards.”

Who said that? The journalist herself? An advocacy organisation? Compared to what? Which jurisdictions? Which legal frameworks? In actual fact, the whole world is trying to navigate this time of adjustment.

And if we are considered to be lagging behind the UK in policing speech and use of the internet that is probably a good thing. We don’t actually want to be throwing people in prison for offensive tweets.

RNZ: “Brown said that X has lost its usefulness to many people and that some New Zealand accounts have been leaving the platform.”

New Zealand has never had widespread use of Twitter/X. However, it has been an important place for political and news discussion. X remains, nonetheless, the number one news app in the New Zealand App Store. Russell Brown may be expressing an opinion, but it serves to bolster the narrative that something is wrong with X and leaving is the right thing.

RNZ: "That's the tragedy of it. The old Twitter had come to fill a kind of important niche for those kind of institutional accounts and it was a way to reach the public quickly with information. And now you're asking the public to wade through a sewer to get there. It's broken now."

“Sewer” is definitely an opinion from Mr Brown. Every major platform has its fair share of harmful content. Even Bluesky, the left’s favoured alternative, has admitted it has had to report large volumes of confirmed child sexual abuse material to authorities. That is not because Bluesky is uniquely evil (though, judging by the hourly struggle sessions, it is extraordinarily toxic); it is because predators exist on all platforms. Declaring X uniquely filthy is a lie.

In any case what is the measure of “usefulness”? How is “broken” defined? If we are talking about New Zealand institutions and the efficacy of getting information to New Zealanders, does he mean reach, engagement, service delivery, emergency communications, content prevalence, or simply “I don’t like the people on there”?

RNZ: “Rights Aotearoa put out an open letter to Justice Minister Paul Goldsmith calling for regulation of X”

Rights Aotearoa is one deeply unstable man posting on Bluesky and writing copious letters to officials. That is, when he is not busy dragging women into court and attempting to weaponise the Harmful Digital Communications Act.

By the way, here is a selection of things this expert on human rights and dignity has posted online about me:

RNZ: “He said Grok’s image generation raised concerns about image-based sexual abuse, child safety and gendered and identity-based harm. ‘The non-consensual creation and potential distribution of intimate imagery constitutes a form of sexual violence with severe psychological and reputational harm to victims.’”

His assessment of non-consensual sexual content as awful is correct, but, again, Grok never created nudes and is not the only tool that can create bikini images. His quotes are not contextualised, although they do sandwich a couple of excellent quotes by the Free Speech Union’s Jillaine Heather. RNZ includes Heather’s comments in a nod to balance, but fails to achieve it because it uses Rights Aotearoa’s comments to argue with her and treats them as the authoritative voice. Never mind that he has the credibility of a litigious grifter who treats court filings like fan fiction.

MP Laura McClure is quoted after Rights Aotearoa and her comments are excellent too:

"I think like many social media companies, X is grappling with how to handle its AI tools. Tech companies can move faster than regulators, they can listen to their users, and in X's case it appears there are already changes to the way X handles requests for the bikini images."

RNZ positions one side as “human rights” (implicitly good) and the other as “free speech” (implicitly selfish or ideological). But the issue here is the question of who gets to decide what adults can access, and how far governments can go in deplatforming whole services. That is a human rights question; RNZ just allocates “human rights” branding to the side that prefers tighter controls.

The article concludes with a final condemnation of X from Brown and a reference to the “enshittification of the internet”. This linked to another RNZ opinion piece, although that one was better signposted. “Enshittification” is less an analysis of the internet than a nostalgic lament for the brief era when Twitter felt “good” to a very specific class of people… you know, the ones whose politics and institutions were culturally dominant during Covid.

It uses Cory Doctorow’s monopoly framework as a moral costume to relitigate Elon Musk’s takeover as a civilisational decline rather than a shift in power.

The author opens by conceding Twitter was always a “hellscape” unless you curated it (eg filtered out what offended your sensibilities), then immediately turns Musk into a pantomime villain: “fascist-curious,” “algorithm changed to reflect his views,” “fired moderators,” “full of gore/porn/scams,” all asserted without comparative metrics or proof. The fact that people didn’t actually “flee overnight” is reinterpreted as users being “trapped” in a Hotel California of “misplaced desires” rather than the simpler explanation that X remains useful for news and public argument.

His accusations of “gore/porn/scams” are not reflected in the aforementioned police data. RNZ focuses on Grok bikini edits and moralised “sewer” language, but it never takes the obvious journalistic step of investigating real world harm. On that front, the available UK data (via NSPCC analysis) points heavily to other platforms. If he wants to see hell, he might like to interrogate groomer central: Snapchat.

The broader thesis is true in parts, as all platforms do tend to degrade as they monetise and consolidate. But the essay’s logic is incoherent when it applies technofeudalism rhetoric to X, which is not an operating system, app store, search monopoly, or gatekeeper in the way Doctorow’s best examples (Amazon, Apple, Google) are. X is a platform with plenty of competitive substitutes, and people demonstrably can and do choose to leave.

Finally, the proposed “fix” (competition, regulation, interoperability, tech-worker power) is presented as liberation, but in this context it reads like a blueprint for reinstitutionalising control. His ideas are stapled onto a Musk-hate narrative and a romanticised memory of the “good” internet that conveniently coincides with maximal deference to authorities. In other words, the piece is about reasserting the cultural-managerial class’s right to decide what “good” looks like, and X is singled out because it is the most visible symbol of that class losing its grip.

I too have called X (and Twitter before it) a “hellscape”. Like the entire internet, and indeed anything involving social interaction between humans, X can be utterly dire. I often ponder on the cost/benefit analysis of the internet in general and usually conclude that on balance it has brought a great deal of good in terms of opening up the world and providing pathways to access unregulated information.

The difference between myself and RNZ’s two articles is that I see regulation, beyond that which is necessary to avoid total anarchy, as a negative. I don’t want the managerial class to control the flow of information and decide what the masses are capable of consuming. The deinstitutionalised internet comes with a plethora of problems, but it also allows us to fact check those in power and navigate around official narratives designed to manipulate and coerce. This is why I defend X so fiercely. Without it we would be in the dark and vulnerable to a strict diet of government-approved information only.

The silliest thing of all is that X’s algorithms are light touch compared to the mammoth Meta ones. Everyone is able to curate their experience pretty effectively by engaging with content they want to see more of. They can also block and mute those they don’t want to hear from with ease. I often assist particularly furious people suffering from Ani Derangement Syndrome to locate the Unfollow and Block buttons. I personally see no porn on X, but that is because I don’t go looking for it and don’t engage with it. Because I engage heavily with news, however, I see plenty of gore… the same gore published by the media.

The articles miss the elephant in the room: many institutions and left-leaning ecosystems turned on X after Musk because X became less governable, less responsive to social pressure campaigns, less aligned with NGO censorship pipelines, and less likely to quietly comply with “misinformation” claims that are often just politically inconvenient speech. RNZ gestures at reputational damage, but it doesn’t interrogate whether “reputational damage” is sometimes just “the righteous people” being angry that they can’t steer the conversation anymore and that it is harder to conduct their struggle sessions and cancellations.

Ani O'Brien comes from a digital marketing background. She has been heavily involved in women's rights advocacy and is a founding council member of the Free Speech Union. This article was originally published on Ani's Substack site and is published here with kind permission.

7 comments:

I don’t understand why Ani is going after the messenger here. The power sits with the billionaire tech lunatic Musk, who himself is backed by the US lunatic president. The power does not sit with a small non-commercial news org. Musk has put out software with dangerous bugs. Bugs which are exploited by the worst of society to harm children, and vulnerable people. It would be irresponsible for RNZ not to inform the public of this. As we have seen with the manage my health debacle, buggy software is everywhere and CEOs don’t care enough to do anything about it. Ani should be asking questions about why this is allowed to happen, but here she is ignoring the real substance and filling up column inches with a diatribe against the little guy who has done nothing wrong. Concerning.

X is great.

Thank you Ani for pointing out that the Ardernistas, entrenched in their lucrative public service non-jobs, are really mad at X for pointing out their deficiencies. Keep up your really outstandingly good work.

Xitter is a cesspit.

Musk is a poster boy for immigrants causing harm.

Musk put out software that lets people take clothes off other people from photos, and you’re going after RNZ?! As a conservative I am abhorred at what Elon Musk did. Those who fail to condemn this are no more conservative than Arlo Guthrie!

Looks like the left have found BV and aren't taking any prisoners.

Anyone supporting RNZ are well and truly captured.

Keep up the good work Ani.
